7 Tips for Improving Performance with HTTP/2

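Summary:

1. HTTP/2 is a binary protocol, which makes it more compact and efficient

2. It uses a single multiplexed connection per domain, rather than one connection per file

3. Headers are compressed with the purpose-built HPACK protocol

4. HTTP/2 defines sophisticated prioritization rules that help the browser request the most urgently needed files first, and NGINX already supports them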
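
Points 1 and 2 can be made concrete with a small sketch. It assumes the frame layout from RFC 7540, section 4.1: every frame starts with a 9-byte header holding a 24-bit payload length, an 8-bit type, 8-bit flags, and a 31-bit stream identifier. The helper names (`pack_frame_header`, `make_data_frame`, `demultiplex`) are made up for illustration and are not part of any library; a real implementation also handles settings, flow control, and HPACK, none of which is shown here.

```python
import struct

FRAME_HEADER_LEN = 9   # every HTTP/2 frame header is 9 bytes (RFC 7540, section 4.1)
FRAME_TYPE_DATA = 0x0  # type code of a DATA frame


def pack_frame_header(length, frame_type, flags, stream_id):
    """Encode 24-bit length, 8-bit type, 8-bit flags and 31-bit stream id."""
    if not 0 <= length < 2 ** 24:
        raise ValueError("length must fit in 24 bits")
    if not 0 <= stream_id < 2 ** 31:
        raise ValueError("stream id must fit in 31 bits")
    # struct has no 24-bit field, so the length is split into 8 + 16 bits.
    return struct.pack(">BHBBI", length >> 16, length & 0xFFFF,
                       frame_type, flags, stream_id)


def unpack_frame_header(data):
    """Decode the first 9 bytes of `data` into (length, type, flags, stream id)."""
    high, low, frame_type, flags, stream_id = struct.unpack(
        ">BHBBI", data[:FRAME_HEADER_LEN])
    # The top bit of the last field is reserved and must be ignored.
    return (high << 16) | low, frame_type, flags, stream_id & 0x7FFFFFFF


def make_data_frame(stream_id, payload):
    """Hypothetical helper: wrap `payload` in a DATA frame for one stream."""
    return pack_frame_header(len(payload), FRAME_TYPE_DATA, 0, stream_id) + payload


def demultiplex(buffer):
    """Split one connection's byte stream back into per-stream payloads."""
    streams = {}
    offset = 0
    while offset + FRAME_HEADER_LEN <= len(buffer):
        length, _type, _flags, stream_id = unpack_frame_header(buffer[offset:])
        start = offset + FRAME_HEADER_LEN
        streams[stream_id] = streams.get(stream_id, b"") + buffer[start:start + length]
        offset = start + length
    return streams


if __name__ == "__main__":
    # Frames of two streams interleaved on a single connection.
    wire = (make_data_frame(1, b"he") + make_data_frame(3, b"abc")
            + make_data_frame(1, b"llo"))
    print(demultiplex(wire))
```

Because each frame carries its own stream identifier, frames belonging to different requests can be interleaved on one connection and reassembled by the receiver, which is what makes a single connection per domain workable.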

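Comments:

HTTP/2's use of a single connection is an important change. The client saves the time spent on establishing multiple connections, and the server has far fewer connections to set up and maintain (especially the HTTP/1 connections that never carried any data).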

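DNS from Theory to Practice: A Comprehensive Overview (Theory Part)

Comments:

An introduction to DNS with a fairly clear structure. Suitable as introductory reading.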

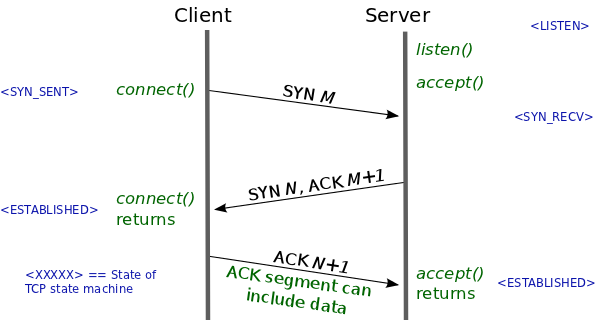

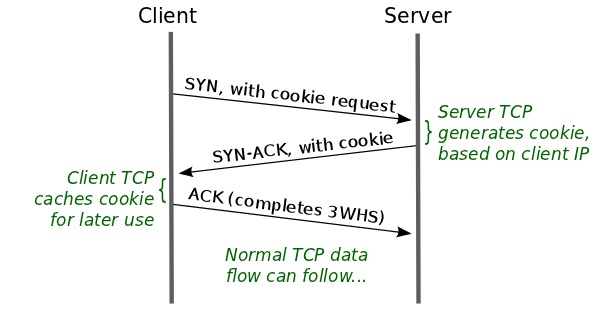

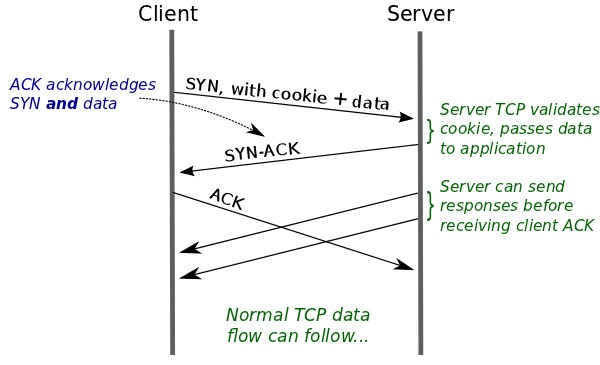

TCP Fast Open: expediting web services

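TFO has not yet formally become a standard, but it has already made its way into the Linux kernel source.

It is still well worth understanding the basic principles; three figures excerpted from the article illustrate how TFO works and what the handshake looks like.

Also note that enabling TFO on production servers is not yet recommended (it is off by default in the Linux 3.10 kernel), because some middleboxes may drop SYN packets that carry options they do not recognize.

Here is a fairly good reference.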
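
To see whether TFO is switched on for a given Linux machine, one can read the `net.ipv4.tcp_fastopen` sysctl. The sketch below assumes the bit meanings described in the kernel's ip-sysctl documentation (0x1 enables client support, 0x2 enables server support); the function names are hypothetical, and the path only exists on Linux, so the reader returns `None` elsewhere.

```python
from pathlib import Path

# Linux-only location of the net.ipv4.tcp_fastopen sysctl.
TFO_SYSCTL = Path("/proc/sys/net/ipv4/tcp_fastopen")

TFO_CLIENT = 0x1  # bit 0: TFO for outgoing connections
TFO_SERVER = 0x2  # bit 1: TFO for listening sockets


def describe_tfo(value):
    """Decode the sysctl bitmask into client/server flags."""
    return {"client": bool(value & TFO_CLIENT),
            "server": bool(value & TFO_SERVER)}


def read_tfo_setting(path=TFO_SYSCTL):
    """Return the current sysctl value, or None if it cannot be read."""
    try:
        return int(path.read_text().strip())
    except (OSError, ValueError):
        return None


if __name__ == "__main__":
    value = read_tfo_setting()
    if value is None:
        print("tcp_fastopen sysctl not available on this system")
    else:
        print(value, describe_tfo(value))
```

A value of 0 means TFO is fully disabled, which matches the default described above for the 3.10 kernel.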

Some relevant networking concepts

Comments:

The networking tools and tuning approaches introduced in this article are well worth borrowing.

A few things about Redis security

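Comments:

Now for something on security, to stretch the imagination.

Just a few days after I read this author's article, the major Chinese security vendors began disclosing how exposed systems in China were to this vulnerability.

It is hard to say whether that is good news or bad.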

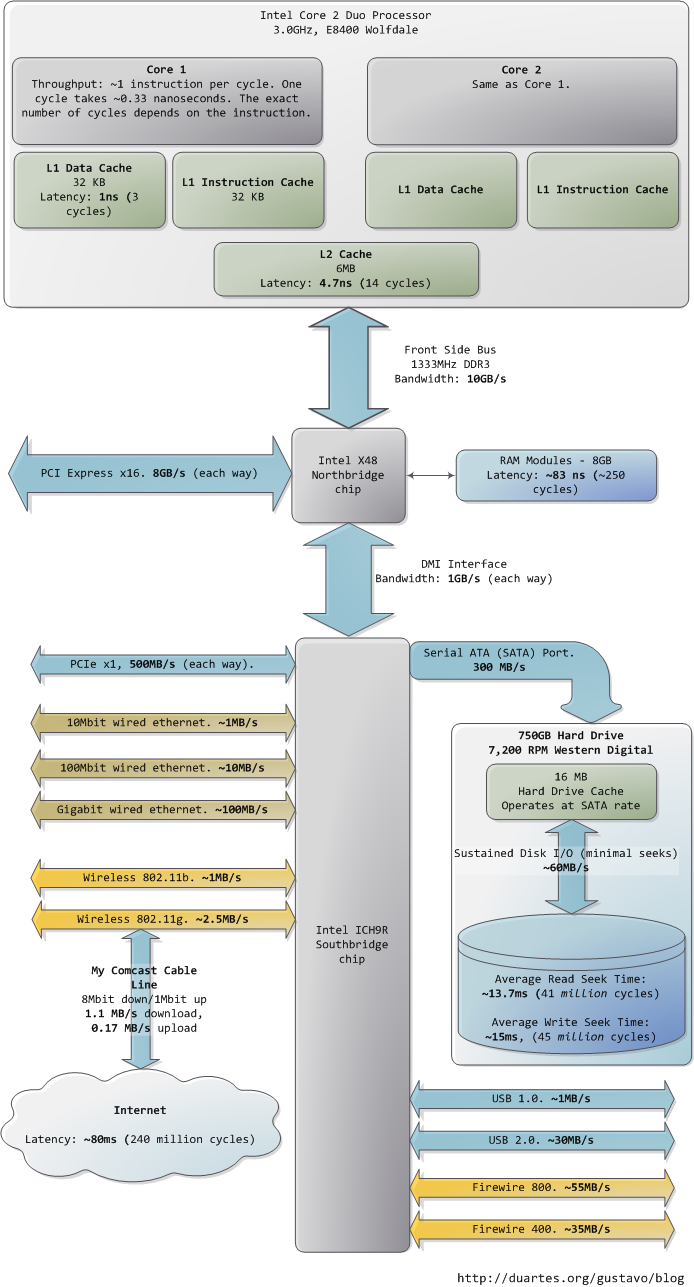

What Your Computer Does While You Wait

Summary:

1. The first thing that jumps out is how absurdly fast our processors are.

Most simple instructions on the Core 2 take one clock cycle to execute,

hence a third of a nanosecond at 3.0Ghz.

For reference, light only travels ~4 inches (10 cm) in the time taken by a clock cycle.

It’s worth keeping this in mind when you’re thinking of optimization – instructions are comically cheap to execute nowadays.

2. As the CPU works away, it must read from and write to system memory,

which it accesses via the L1 and L2 caches. The caches use static RAM,

a much faster (and expensive) type of memory than the DRAM memory used as the main system memory.

The caches are part of the processor itself and for the pricier memory we get very low latency.

3. Here we see our first major hit, a massive ~250 cycles of latency that often leads to a stall,

when the CPU has no work to do while it waits.

To put this into perspective, reading from L1 cache is like grabbing a piece of paper from your desk (3 seconds),

L2 cache is picking up a book from a nearby shelf (14 seconds),

and main system memory is taking a 4-minute walk down the hall to buy a Twix bar.
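
The numbers in the excerpt are easy to check. The sketch below recomputes the cycle time and the distance light covers in one cycle at 3.0 GHz, then converts cycle counts into the human-scale times of the analogy. It assumes the analogy maps one clock cycle to one second, which the quoted figures suggest (so L1 is taken as 3 cycles and L2 as 14 cycles); the function names are made up for illustration.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def cycle_time_ns(clock_ghz):
    """Duration of one clock cycle in nanoseconds."""
    return 1.0 / clock_ghz


def light_distance_cm(clock_ghz):
    """Distance light travels in a vacuum during one clock cycle, in cm."""
    seconds = cycle_time_ns(clock_ghz) * 1e-9
    return SPEED_OF_LIGHT_M_PER_S * seconds * 100


def human_scale(cycles):
    """Express a cycle count as time, assuming 1 cycle corresponds to 1 second."""
    minutes, seconds = divmod(cycles, 60)
    return f"{minutes} min {seconds} s" if minutes else f"{seconds} s"


if __name__ == "__main__":
    print(f"cycle time at 3.0 GHz: {cycle_time_ns(3.0):.3f} ns")
    print(f"light per cycle: {light_distance_cm(3.0):.1f} cm")
    # Cycle counts below are inferred from the analogy, not measured.
    for name, cycles in [("L1 cache", 3), ("L2 cache", 14), ("main memory", 250)]:
        print(f"{name}: {cycles} cycles -> {human_scale(cycles)}")
```

Under that mapping, roughly 250 cycles of main-memory latency comes out at a little over four minutes, in line with the walk down the hall.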

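Comments:

Knowing the rough latency of each component counts as must-have knowledge for a *professional*, haha

The article is written very vividly; every access speed is explained with a comparison that is intuitive and easy to grasp.

That being so, here is one more figure from the article.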

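How I Found Cisco Backdoor Routers Among 300 Million IPs

Comments: another one to stretch the imagination...

However, the original article on freebuf has been deleted, which scared me into quickly backing up the original page.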